Today’s systems are inundated with data. Each service, and indeed server and application produce logs, some telling more or less of a story. But when those logs remain scattered in disparate locations, making sense of what’s really going on begins to get tough. Log aggregation is popular for this very reason. It’s like drawing all logs in a single location, except you can actually search for them − something that may sound simple, but has the potential to make queries faster and clearer (and far more useful).

Why You Can’t Afford Not to Log Aggregate

With the advancement of cloud-native and distributed settings, the amounts of logs have grown substantially. Classic approaches of going through the logs one by one become useless. Bugs fall through the cracks, and teams lose hours switching from system to system.

Log aggregation solves this problem by aggregating logs from all sources. Whether it’s a microservice, container, or virtual machine − all logs land at one place consistently. This serves to form a holistic view of the behavior of the system as opposed to isolated snippets.

A single source of truth gives teams control and takes the guesswork out of incidents.

Realizing the Advantages of Centralized Logging

Everything gets easier from the point organizations implement log aggregation. You can see things better and troubleshooting is much easier.

The top benefits include:

- Faster root-cause detection

- Continuity of events between systems

- Better monitoring accuracy

- More reliable performance analysis

Logs can be arranged side-by-side, patterns start to stand out quickly, and small issues are captured before they have a chance to grow into anything more serious.

How Log Aggregation Improves Troubleshooting

One of the hardest things about managing distributed systems is tracing problems as they hop from one service to another. Without a single view, teams have to gather and interpret clues from all over.

Log aggregation eliminates that hassle. With logs in a single timeline, it’s easy to see errors from beginning to end. Every log entry adds some color to the complete picture, and teams are able to not just see what happened but why it occurred.

This full optimized process saves time during such critical moments when everything is down to the wire.

What is a Good Log Aggregation Architecture?

In order for log aggregation to be successful we need a nice organization of data. Even the best platforms in the world won’t do you any good if your logs are inconsistent and drowned in noise.

A strong setup includes:

- Standardized timestamps

- Structured log formats

- Central hub with strong search capabilities

- Filtering rules which minimize superfluous entries

- Clear tagging and indexing practices

Logs that follow a predictable pattern are easier to extract insights and automate from.

Designing a Log Aggregation that Actually Works

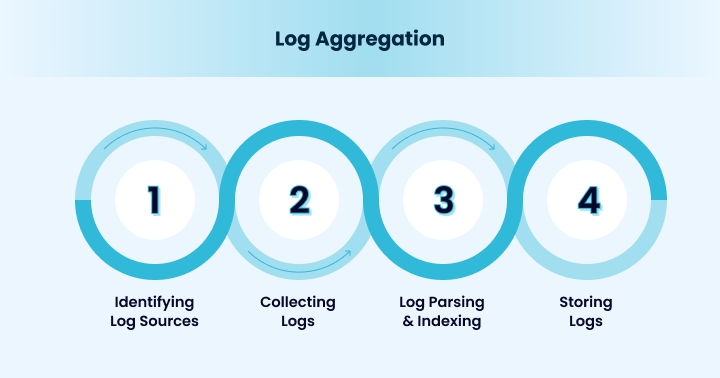

Setting up a good log aggregation system is something you have to plan. Teams should begin by understanding where logs come from and how often they are created.

A successful strategy includes:

- Selecting an appropriate tool for big data

- Defining log retention policies

- Ensuring logs remain structured

- Applying filters for elimination of unrelated information

- These results are reviewed for aggregation day by day to increase the accuracy

These are necessary actions to continue high-quality logging of data. The aggregated data is always useful.

Final Thoughts

Log aggregating is a highly effective habit in managing intricate digital properties. Centralizing logs in one place turns disparate data into actionable insights. Teams pick up speed, accuracy and confidence in their systems. And as our technology continues to evolve, log aggregation will always be a critical part of effectively keeping the lights on.